Evaluation Benchmark for Multimodal Models

Paper Title

MMMU: A Massive Multi-discipline Multimodal Understanding and Reasoning Benchmark for Expert AGI

Authors

Xiang Yue et. al.

Affiliations

IN.AI Research et. al.

Date

Nov 27, 2023

5Ws

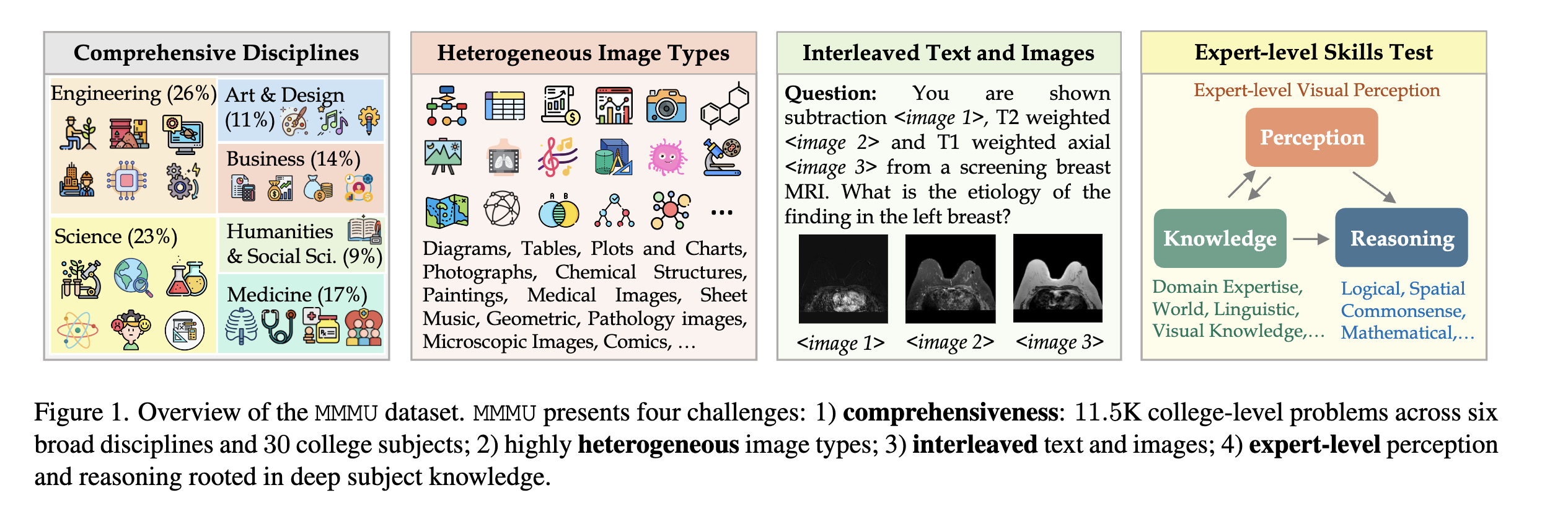

The paper "MMMU: A Massive Multi-discipline Multimodal Understanding and Reasoning Benchmark for Expert AGI" presents a new benchmark designed to evaluate multimodal models across a range of disciplines requiring expert-level subject knowledge and reasoning. Here's an analysis based on the requested criteria:

1. What is the problem?

The primary problem addressed by MMMU (Massive Multi-discipline Multimodal Understanding) is the lack of benchmarks that can effectively evaluate multimodal models (models that understand both text and images) across diverse disciplines at an expert level. Existing benchmarks often focus on common knowledge or basic reasoning, falling short in evaluating deep, expert-level domain knowledge and advanced reasoning skills.

2. Why is the problem important?

This problem is crucial as it addresses a significant gap in the assessment of artificial general intelligence (AGI) capabilities in AI models. By providing a rigorous and comprehensive benchmark, MMMU aims to stimulate advancements in multimodal foundation models, pushing them towards expert-level understanding and reasoning. This is vital for the development of AI systems that can operate across a wide range of professional and academic fields, mirroring the expertise of skilled adults.

3. Why is the problem difficult?

The problem is challenging because it requires creating a benchmark that spans a wide range of disciplines (like Art & Design, Business, Science, Health & Medicine, Humanities & Social Science, and Tech & Engineering) with college-level multimodal questions. These questions, often sourced from college exams, quizzes, and textbooks, are diverse and complex, requiring not only an understanding of text and images but also the ability to apply deep subject-specific knowledge and expert-level reasoning.

4. What are the old techniques?

Previous benchmarks and models have primarily focused on either text-based questions or multimodal tasks requiring only basic perceptual skills and common knowledge. These include models like LXMERT, UNITER, VinVL, Oscar, and datasets like VQA, TextVQA, OK-VQA, and GQA. These benchmarks and models are limited in their ability to assess expert-level understanding and reasoning across a broad range of disciplines.

5. Advantages and disadvantages of the new techniques?

Advantages

MMMU offers a more comprehensive and challenging benchmark that requires not only basic perceptual skills but also in-depth subject-specific knowledge and complex reasoning. It is designed for college-level multi-discipline multimodal understanding and reasoning, covering a wide range of subjects and image types, which is a significant step forward in evaluating and developing advanced AI models.

Disadvantages

The creation and maintenance of such a comprehensive benchmark are more complex and resource-intensive. The specificity and difficulty level might limit its applicability to only advanced models capable of expert-level reasoning and understanding. Additionally, the evaluation of such models may require more sophisticated and nuanced methods to assess their performance accurately.

6. Conclusion

In summary, MMMU addresses a significant gap in the evaluation of AI models' capability to handle expert-level tasks across various disciplines. It represents a crucial step towards developing AI systems with advanced understanding and reasoning capabilities akin to human experts.