2023-11-27论文解读

Paper Title

GAIA: A Benchmark for General AI Assistants

Authors

Grégoire Mialon, Clémentine Fourrier, Craig Swift, Thomas Wolf, Yann LeCun, and Thomas Scialom

Affiliations

- FAIR Meta (for Grégoire Mialon and Yann LeCun)

- HuggingFace (for Clémentine Fourrier and Thomas Wolf)

- AutoGPT (for Craig Swift)

- GenAI Meta (for Thomas Scialom)

Date

Nov 21, 2023

5Ws

The paper "GAIA: A Benchmark for General AI Assistants" introduces GAIA as a significant benchmark in AI research, specifically designed for evaluating General AI Assistants. Here's an analysis based on your request:

1. What is the problem?

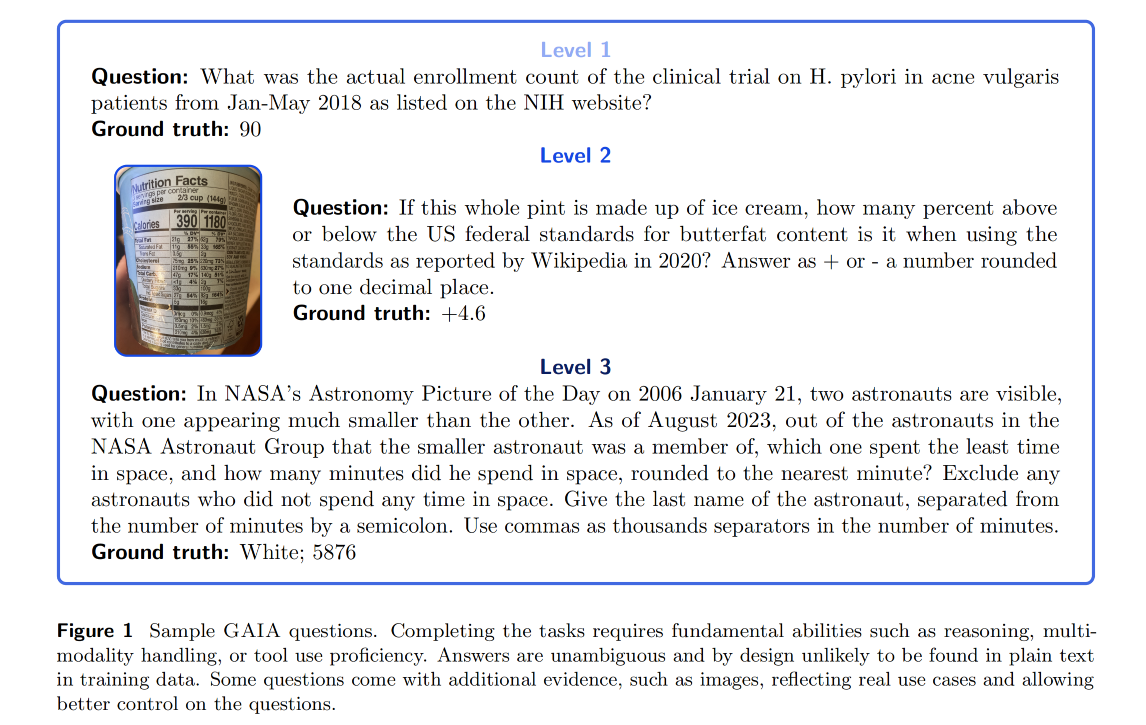

GAIA addresses the challenge of evaluating the capabilities of General AI Assistants. Traditional benchmarks often do not adequately measure the performance of these systems in real-world scenarios or in tasks that require a broad set of capabilities, such as reasoning, handling multimodal data, and web browsing.

2. Why is the problem important?

Evaluating AI systems, especially those intended as general-purpose assistants, is crucial for understanding their capabilities and limitations. Current trends in AI benchmarks focus on tasks increasingly difficult for humans but not necessarily challenging for AI systems. GAIA proposes a more balanced approach, targeting tasks simple for humans but difficult for AI, aligning with the path towards Artificial General Intelligence (AGI).

3. Why is the problem difficult?��

The difficulty lies in creating a benchmark that is both challenging for advanced AI systems and conceptually simple for humans. GAIA introduces tasks that require a combination of skills, including advanced reasoning, multi-modality understanding, coding, and tool use like web browsing. Crafting these tasks while ensuring they are rooted in practical use cases and not easily solvable by brute force or memorization adds to the complexity.

4. What are the old techniques?

Previous benchmarks often focused on tasks increasingly difficult for humans, operating in closed or synthetic environments, or lacked real-world applicability. They also tended to concentrate on specific domains like STEM or law, which advanced LLMs were already performing well in, thus not adequately challenging their broader capabilities.

5. Advantages and disadvantages of the new techniques?

Advantages:

- Real-World Relevance: GAIA's questions are rooted in practical scenarios, making the benchmark more applicable to real-world use cases.

- Comprehensive Skill Evaluation: It assesses a wide range of abilities, including web browsing, multi-modality handling, and reasoning.

- Conceptual Simplicity: Despite being challenging for AI, the tasks are simple for humans, making them easy to understand and evaluate.

Disadvantages:

- Resource Intensive: Creating and validating such complex questions is time-consuming.

- Less Focus on Specialized Domains: By not targeting highly specialized skills, it might overlook specific areas where AI advancements are significant.

- Potential for Obsolescence: As AI capabilities evolve rapidly, some aspects of GAIA might become less challenging sooner than expected.

In summary, GAIA represents a significant step in AI benchmarking, focusing on a holistic evaluation of AI assistants' capabilities in handling real-world, conceptually simple tasks that are challenging for current AI systems. Its approach marks a shift from the trend of targeting tasks increasingly difficult for humans, aiming instead to evaluate AI systems on tasks that demonstrate a balance of practical applicability and challenging aspects.